STREAMS Programming Guide, Oracle Solaris 11.1 Information Library

Developers implementing STREAMS device drivers and STREAMS modules use a set of STREAMS-specific functions and data structures. This section describes some basic kernel-level STREAMS concepts.

The stream head is created when a user process opens a STREAMS device. It translates the interface calls of the user process into STREAMS messages, which it sends to the stream. The stream head also translates messages originating from the stream into a form that the application can process. The stream head contains a pair of queues; one queue passes messages upstream from the driver, and the other passes messages to the driver. The queues are the pipelines of the stream, passing data between the stream head, modules, and driver.

A STREAMS module performs processing operations on messages passing from a stream head to a driver or from a driver to a stream head. For example, a TCP module might add header information to the front of data passing downstream through it. Not every stream requires a module. There can be zero or more modules in a stream.

Modules are stacked (pushed) onto and unstacked (popped) from a stream. Each module must provide open(), close(), and put() entry points, and provides a service() entry point if the module supports flow control.

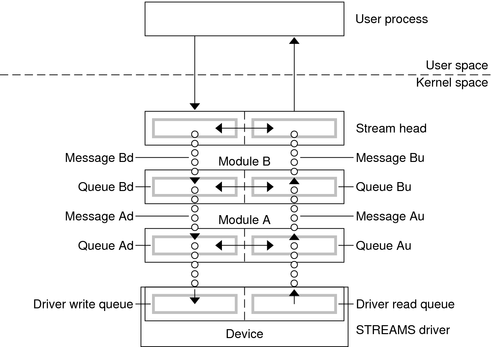

Like the stream head, each module contains a pair of queue structures, although a module only queues data if it is implementing flow control. Figure 1-4 shows the queue structures Au/Ad associated with Module A (“u” for upstream, “d” for downstream) and Bu/Bd associated with Module B.

The two queues operate completely independently. Messages and data can be shared between upstream and downstream queues only if the module functions are specifically programmed to share data.

Within a module, one queue can refer to the messages and data of the opposing queue. A queue can directly refer to the queue of the successor module (adjacent in the direction of message flow). For example, in Figure 1-4, Au (the upstream queue from Module A) can reference Bu (the upstream queue from Module B). Similarly, Bd can reference Ad.
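The relationship between paired and neighboring queues can be sketched as a small user-space model. This is an illustration only, not the kernel's queue(9S) layout: the names model_queue, model_module, model_module_init, and model_link are hypothetical, and Module B is assumed to sit above Module A (closer to the stream head), which is what allows Au to refer to Bu.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical user-space model; NOT the kernel queue(9S) structure. */
struct model_queue {
    const char *name;
    struct model_queue *next;  /* successor queue in the direction of flow */
    struct model_queue *mate;  /* opposing queue inside the same module */
};

struct model_module {
    struct model_queue up;    /* carries messages toward the stream head */
    struct model_queue down;  /* carries messages toward the driver */
};

/* Set up a module's queue pair so that each queue can find its mate. */
static void model_module_init(struct model_module *m,
                              const char *up_name, const char *down_name)
{
    assert(m != NULL);
    m->up.name = up_name;
    m->up.next = NULL;
    m->up.mate = &m->down;
    m->down.name = down_name;
    m->down.next = NULL;
    m->down.mate = &m->up;
}

/* Stack 'upper' on top of 'lower' (upper is closer to the stream head). */
static void model_link(struct model_module *upper, struct model_module *lower)
{
    assert(upper != NULL && lower != NULL);
    upper->down.next = &lower->down;  /* downstream flow: upper to lower */
    lower->up.next = &upper->up;      /* upstream flow: lower to upper */
}
```

Calling model_link(&b, &a) for Modules B and A leaves Au pointing at Bu and Bd pointing at Ad, while each queue still reaches its opposing queue through mate.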

Figure 1-4 Stream in More Detail

Both queues in a module contain messages, processing procedures, and private data.

Messages: Blocks of data that pass through, and can be operated on by, a module.

Processing procedures: Individual put and service routines on the read and write queues process messages. The put procedure passes messages from one queue to the next in a stream and is required for each queue. It can do additional message processing. The service procedure is optional and does deferred processing of messages. These procedures can send messages either upstream or downstream. Both procedures can also modify the private data in their module.

Private data: Data private to the module (for example, state information and translation tables).

Open and close entry points must also be provided. The open routine is invoked when the module is pushed onto the stream or the stream is reopened. The close routine is invoked when the module is popped or the stream is closed.

A module is initialized in one of three ways: by an I_PUSH ioctl(2), by being pushed automatically during an open of a stream that has been configured by the autopush(1M) mechanism, or when that stream is reopened.

A module is disengaged by close or the I_POP ioctl(2).

STREAMS device drivers are structurally similar to STREAMS modules and character device drivers. The STREAMS interfaces to driver routines are identical to the interfaces used for modules. For instance, both must declare open, close, put, and service entry points.

There are some significant differences between modules and drivers.

A driver:

Must be able to handle interrupts from the device.

Is represented in the file system by a character-special file.

Is initialized and disengaged using open(2) and close(2). open(2) is called when the device is first opened and for each reopen of the device. close(2) is only called when the last reference to the stream is closed.

Both drivers and modules can pass signals, error codes, and return values to processes using message types provided for that purpose.

All kernel-level input and output under STREAMS is based on messages. STREAMS messages are built in sets of three:

a message header structure (msgb(9S)) that identifies the message instance.

a data block structure (datab(9S)) that points to the data of the message.

the data itself

Each data block and data pair can be referenced by one or more message headers. The objects passed between STREAMS modules are pointers to messages. Messages are sent through a stream by successive calls to the put procedure of each module or driver in the stream. Messages can exist as independent units, or on a linked list of messages called a message queue. STREAMS utility routines enable developers to manipulate messages and message queues.
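The way several message headers can share one data block can be sketched with a simplified user-space model. The structures below are loosely inspired by msgb(9S) and datab(9S) but are not the kernel definitions; model_msgb, model_datab, model_alloc, model_dup, and model_free are hypothetical names, and the reference count is an assumption about how sharing could be tracked.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical data block: describes one buffer shared by headers. */
struct model_datab {
    unsigned char *base;  /* start of the data buffer */
    size_t size;          /* buffer size in bytes */
    unsigned refs;        /* number of headers referring to this block */
};

/* Hypothetical message header: identifies one message instance. */
struct model_msgb {
    struct model_datab *datap;  /* shared data block */
    unsigned char *rptr;        /* first unread byte */
    unsigned char *wptr;        /* first unwritten byte */
};

/* Allocate the header, the data block, and the data buffer together. */
static struct model_msgb *model_alloc(size_t size)
{
    struct model_msgb *mp = malloc(sizeof *mp);
    struct model_datab *dp = malloc(sizeof *dp);
    unsigned char *buf = malloc(size ? size : 1);

    if (mp == NULL || dp == NULL || buf == NULL) {
        free(mp);
        free(dp);
        free(buf);
        return NULL;
    }
    dp->base = buf;
    dp->size = size;
    dp->refs = 1;
    mp->datap = dp;
    mp->rptr = mp->wptr = buf;
    return mp;
}

/* Create a second header that refers to the same data block. */
static struct model_msgb *model_dup(struct model_msgb *mp)
{
    struct model_msgb *copy = malloc(sizeof *copy);

    if (copy == NULL)
        return NULL;
    *copy = *mp;           /* same block, same read/write positions */
    copy->datap->refs++;
    return copy;
}

/* Free one header; the block and data go away with the last reference. */
static void model_free(struct model_msgb *mp)
{
    struct model_datab *dp = mp->datap;

    assert(dp->refs > 0);
    if (--dp->refs == 0) {
        free(dp->base);
        free(dp);
    }
    free(mp);
}
```

In this sketch only the small header is copied when a message is duplicated, so the data itself is never moved when pointers to messages are passed from one module to the next.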

All STREAMS messages are assigned message types to indicate how they will be used by modules and drivers and how they will be handled by the stream head. Message types are assigned by the stream head, driver, or module when the message is created. The stream head converts the system calls read, write, putmsg, and putpmsg into specified message types, and sends them downstream. It responds to other calls by copying the contents of certain message types that were sent upstream.

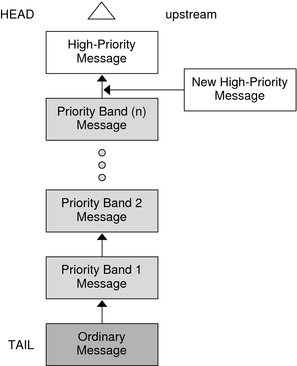

Sometimes messages with urgent information, such as break or alarm conditions, must pass through the stream quickly. To accommodate them, STREAMS uses message queuing priority and high-priority message types. All messages have an associated priority field. Normal (ordinary) messages have a priority of zero, while priority messages have a priority band greater than zero. High-priority messages have a high priority by virtue of their message type, are not blocked by STREAMS flow control, and are processed ahead of all ordinary messages on the queue.

Nonpriority, ordinary messages are placed at the end of the queue, following all other messages that might be waiting. Priority messages can be either high priority or priority band messages. High-priority messages are placed at the head of the queue but after any other high-priority messages already in the queue. Priority band messages enable support of urgent, expedited data. Priority band messages are placed in the queue in the following order:

after high-priority messages but before ordinary messages.

below all messages that have a priority greater than or equal to their own.

above any messages with a lesser priority.

Figure 1-5 shows the message queuing priorities.

Figure 1-5 Message Priorities
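The ordering rules for ordinary, priority band, and high-priority messages can be expressed as a single insertion rule. The following is a user-space sketch under stated assumptions, not the kernel's queuing code: model_msg, model_msgq, model_rank, and model_enqueue are hypothetical names, and ranking high-priority messages as 256 (one above the largest band) is simply a convenient way to keep them ahead of every band.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical message descriptor used only for this illustration. */
struct model_msg {
    int id;              /* label used to observe the resulting order */
    int high;            /* nonzero for a high-priority message type */
    unsigned char band;  /* 0 = ordinary, 1..255 = priority band */
    struct model_msg *next;
};

struct model_msgq {
    struct model_msg *first;
};

/* High-priority messages outrank every band; otherwise rank is the band. */
static int model_rank(const struct model_msg *m)
{
    return m->high ? 256 : m->band;
}

/*
 * Insert 'm' after every queued message whose rank is greater than or
 * equal to its own, and therefore ahead of any message of lesser rank.
 * Messages of equal rank stay in arrival order.
 */
static void model_enqueue(struct model_msgq *q, struct model_msg *m)
{
    struct model_msg **link;

    assert(q != NULL && m != NULL);
    link = &q->first;
    while (*link != NULL && model_rank(*link) >= model_rank(m))
        link = &(*link)->next;
    m->next = *link;
    *link = m;
}
```

With this rule an ordinary message always lands at the tail, a band message lands behind equal or higher bands, and a high-priority message lands at the head behind any high-priority messages that arrived earlier.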

High-priority message types cannot be changed into normal or priority band message types. Certain message types come in equivalent high-priority or ordinary pairs (for example, M_PCPROTO and M_PROTO), so that a module or device driver can choose between the two priorities when sending information.

A queue is an interface between a STREAMS driver or module and the rest of the stream (see queue(9S)). The queue structure holds the messages, and points to the STREAMS processing routines that should be applied to a message as it travels through a module. STREAMS modules and drivers must explicitly place messages on a queue, for example, when flow control is used.

Each open driver or pushed module has a pair of queues allocated, one for the read side and one for the write side. Queues are always allocated in pairs. Kernel routines are available to access each queue's mate. The queue's put or service procedure can add a message to the current queue. If a module does not need to queue messages, its put procedure can call the neighboring queue's put procedure.

The queue's service procedure deals with messages on the queue, usually by removing successive messages from the queue, processing them, and calling the put procedure of the next module in the stream to pass the message to the next queue. Chapter 7, STREAMS Framework - Kernel Level, discusses the service and put procedures in more detail.
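The division of labor between put and service procedures can be illustrated with a user-space model. This is a sketch, not kernel code: real modules use utilities such as putq(9F), getq(9F), canputnext(9F), and putnext(9F) on message pointers, whereas the hypothetical model_q below holds plain integers in a fixed array and uses an assumed limit field as a stand-in for flow control.

```c
#include <assert.h>
#include <stddef.h>

#define MODEL_QCAP 8

struct model_q;
typedef void (*model_put_fn)(struct model_q *q, int msg);

/* Hypothetical queue: a put routine, a neighbor, and held messages. */
struct model_q {
    model_put_fn put;
    struct model_q *next;  /* next queue in the direction of flow */
    int held[MODEL_QCAP];  /* messages waiting on this queue */
    size_t count;
    size_t limit;          /* assumed flow-control threshold */
};

/* A queue accepts more messages while it is below its limit. */
static int model_canput(const struct model_q *q)
{
    return q->count < q->limit;
}

/* Put routine for the end of the stream: store every message. */
static void model_sink_put(struct model_q *q, int msg)
{
    assert(q->count < MODEL_QCAP);
    q->held[q->count++] = msg;
}

/*
 * Put routine for a module: pass the message straight to the neighbor
 * when nothing is already waiting and the neighbor can accept it;
 * otherwise hold it locally so that ordering is preserved.
 */
static void model_mod_put(struct model_q *q, int msg)
{
    assert(q->next != NULL);
    if (q->count == 0 && model_canput(q->next)) {
        q->next->put(q->next, msg);
    } else {
        assert(q->count < MODEL_QCAP);
        q->held[q->count++] = msg;
    }
}

/* Service routine: drain held messages while the neighbor accepts them. */
static void model_mod_service(struct model_q *q)
{
    size_t sent = 0;

    while (sent < q->count && model_canput(q->next))
        q->next->put(q->next, q->held[sent++]);

    /* Keep whatever could not be sent, still in arrival order. */
    for (size_t i = sent; i < q->count; i++)
        q->held[i - sent] = q->held[i];
    q->count -= sent;
}
```

A module that never needs to defer work could omit the service routine and the local array entirely and have its put routine always call the neighboring put routine.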

Each queue also has a pointer to an open and close routine. The open routine of a driver is called when the driver is first opened and on every successive open of the stream. The open routine of a module is called when the module is first pushed on the stream and on every successive open of the stream. The close routine of the module is called when the module is popped (removed) off the stream, or at the time of the final close. The close routine of the driver is called when the last reference to the stream is closed and the stream is dismantled.

Previously, streams were described as stacks of modules, with each module (except the head) connected to one upstream module and one downstream module. While this can be suitable for many applications, others need the ability to multiplex streams in a variety of configurations. Typical examples are terminal window facilities and internetworking protocols (which might route data over several subnetworks).

An example of a multiplexer is a module that multiplexes data from several upper streams to a single lower stream. An upper stream is one that is upstream from the multiplexer, and a lower stream is one that is downstream from the multiplexer. A terminal windowing facility might be implemented in this fashion, where each upper stream is associated with a separate window.

A second type of multiplexer might route data from a single upper stream to one of several lower streams. An internetworking protocol could take this form, where each lower stream links the protocol to a different physical network.

A third type of multiplexer might route data from one of many upper streams to one of many lower streams.
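The second kind of multiplexer, which routes data from an upper stream to one of several lower streams, can be sketched in user space as a table lookup. Everything here is hypothetical and chosen for illustration: model_mux, model_lower, model_mux_link, and model_mux_route are invented names, and selecting a lower stream by a numeric network identifier is only one possible routing policy.

```c
#include <assert.h>
#include <stddef.h>

#define MODEL_MAX_LOWER 4

/* Hypothetical lower stream, identified by the network it reaches. */
struct model_lower {
    int net_id;          /* assumed identifier of the physical network */
    unsigned delivered;  /* messages routed to this lower stream */
};

/* Hypothetical multiplexer: a table of linked lower streams. */
struct model_mux {
    struct model_lower *lower[MODEL_MAX_LOWER];
    size_t nlower;
};

/* Link a lower stream under the multiplexer; -1 means the table is full. */
static int model_mux_link(struct model_mux *mux, struct model_lower *ls)
{
    assert(mux != NULL && ls != NULL);
    if (mux->nlower == MODEL_MAX_LOWER)
        return -1;
    mux->lower[mux->nlower] = ls;
    return (int)mux->nlower++;
}

/*
 * Route one message from an upper stream to the lower stream that
 * serves 'net_id'. Returns 0 on success or -1 if no lower stream
 * is linked for that network.
 */
static int model_mux_route(struct model_mux *mux, int net_id)
{
    assert(mux != NULL);
    for (size_t i = 0; i < mux->nlower; i++) {
        if (mux->lower[i]->net_id == net_id) {
            mux->lower[i]->delivered++;
            return 0;
        }
    }
    return -1;
}
```

A many-to-one multiplexer would invert the picture, with several upper streams feeding a single lower entry, and a many-to-many multiplexer would combine both lookups.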

The STREAMS mechanism supports the multiplexing of streams through special pseudo-device drivers. A user can activate a linking facility mechanism within the STREAMS framework to dynamically build, maintain, and dismantle multiplexed stream configurations. Simple configurations like those described previously can be combined to form complex, multilevel multiplexed stream configurations.

STREAMS multiplexing configurations are created in the kernel by interconnecting multiple streams. Conceptually, a multiplexer can be divided into two components: the upper multiplexer and the lower multiplexer. The lower multiplexer acts as a stream head for one or more lower streams. The upper multiplexer acts as a device for one or more upper streams. How data is passed between the upper and lower multiplexer is up to the implementation. Chapter 13, STREAMS Multiplex Drivers, covers implementing multiplexers.

The Oracle Solaris operating environment kernel is multithreaded to make effective use of symmetric shared-memory multiprocessor computers. All parts of the kernel, including STREAMS modules and drivers, must ensure data integrity in a multiprocessing environment. For the most part, developers must ensure that concurrently running kernel threads do not attempt to manipulate the same data at the same time. The STREAMS framework provides multithreaded (MT) STREAMS perimeters, which give the developer control over the level of concurrency allowed in a module. The DDI/DKI provides several advisory locks for protecting data. See Chapter 12, Multithreaded STREAMS, for more information.