Managing Network File Systems in Oracle Solaris 11.1 Oracle Solaris 11.1 Information Library |


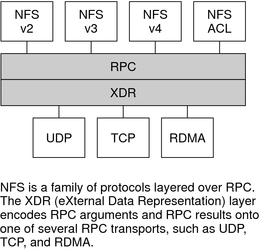

Starting in the Oracle Solaris 11.1 release, the default transport for NFS is the Remote Direct Memory Access (RDMA) protocol, which is a technology for memory-to-memory transfer of data over high-speed networks. Specifically, RDMA provides remote data transfer directly to and from memory without CPU intervention. RDMA also provides direct data placement, which eliminates data copies and, therefore, further reduces CPU intervention. Thus, RDMA not only relieves the host CPU but also reduces contention for the host memory and I/O buses. To provide this capability, RDMA combines the interconnect I/O technology of InfiniBand on SPARC platforms with the Oracle Solaris operating system. The following figure shows the relationship of RDMA to other protocols, such as UDP and TCP.

Figure 3-1 Relationship of RDMA to Other Protocols

Because RDMA is the default transport protocol for NFS, no special share or mount options are required to use RDMA on a client or server. The existing automounter maps, vfstab entries, and file system shares work with the RDMA transport. NFS mounts over the RDMA transport occur transparently when InfiniBand connectivity exists on SPARC platforms between the client and the server. If the RDMA transport is not available on both the client and the server, NFS falls back first to the TCP transport and then, if TCP is unavailable, to UDP. Note, however, that if you use the proto=rdma mount option, NFS mounts are forced to use RDMA only and no fallback occurs.
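The fallback order described above can be modeled as a small decision function. The following Python sketch is illustrative only: the name choose_transport and its parameters are hypothetical, it is not a Solaris API, and it does not reflect how the NFS client is actually implemented. It assumes that each host simply advertises a set of available transports.

```python
# Illustrative model of the NFS transport fallback order described above.
# choose_transport and its arguments are hypothetical names, not a Solaris API.

FALLBACK_ORDER = ("rdma", "tcp", "udp")  # default preference: RDMA, then TCP, then UDP


def choose_transport(client, server, proto=None):
    """Return the transport a mount would use, or None if no transport fits.

    client, server: sets of transports available on each host.
    proto: optional value of the proto= mount option (for example "rdma").
    """
    common = set(client) & set(server)
    if proto is not None:
        # A forced transport is used exclusively; there is no fallback.
        return proto if proto in common else None
    for transport in FALLBACK_ORDER:
        if transport in common:
            return transport
    return None


if __name__ == "__main__":
    ib_host = {"rdma", "tcp", "udp"}   # host with InfiniBand connectivity
    ethernet_host = {"tcp", "udp"}     # host without RDMA
    print(choose_transport(ib_host, ib_host))
    print(choose_transport(ib_host, ethernet_host))
    print(choose_transport(ib_host, ethernet_host, proto="rdma"))
```

In this model, a mount between two RDMA-capable hosts selects RDMA, a mount to a host without RDMA selects TCP, and a mount forced with proto=rdma to a host without RDMA finds no usable transport.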

To specify that only TCP and UDP be used, you can use the proto=tcp/udp mount option. This option disables RDMA on an NFS client. For more information about NFS mount options, see the mount_nfs(1M) man page and mount Command.
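As a rough illustration of how the proto option appears on a command line, the following Python sketch builds, but does not run, a mount command string. It assumes the usual Solaris form mount -F nfs -o options resource mount_point. The helper name nfs_mount_command, the server name server1, and the paths are placeholders, not values from this guide. The sketch passes a single transport name to proto; see the mount_nfs(1M) man page for the values that your release accepts.

```python
# Sketch: build (but do not execute) a Solaris-style NFS mount command line.
# nfs_mount_command, server1, and the paths are hypothetical placeholders.
import shlex


def nfs_mount_command(resource, mount_point, proto=None):
    """Return a mount command string, optionally forcing one transport."""
    argv = ["mount", "-F", "nfs"]
    if proto is not None:
        # proto= restricts the mount to the named transport.
        argv += ["-o", f"proto={proto}"]
    argv += [resource, mount_point]
    return shlex.join(argv)


if __name__ == "__main__":
    # Default: no proto option, so the normal transport selection applies.
    print(nfs_mount_command("server1:/export/data", "/mnt"))
    # Force RDMA only.
    print(nfs_mount_command("server1:/export/data", "/mnt", proto="rdma"))
    # Force TCP, which keeps the client from using RDMA for this mount.
    print(nfs_mount_command("server1:/export/data", "/mnt", proto="tcp"))
```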

Note - RDMA for InfiniBand uses the IP addressing format and the IP lookup infrastructure to specify peers. However, because RDMA is a separate protocol stack, it does not fully implement all IP semantics. For example, RDMA does not use IP addressing to communicate with peers. Therefore, RDMA might bypass configurations for various security policies that are based on IP addresses. However, the NFS and RPC administrative policies, such as mount restrictions and secure RPC, are not bypassed.